Designing Algorithms as Expressive Agents

— blurring the line between human and machine creativity

The Opportunity

As AI increasingly integrates into creative workflows, designers confront critical questions around authorship, originality, and artistic control.

NeuroCanvas explored how designers can collaborate with generative algorithms while retaining creative intent and ownership.

The goal was to reframe AI from an opaque “black-box” tool into a transparent, expressive partner.

The Insight

Generative AI often functions as a “black box”, producing outputs without revealing how they are formed.

For designers, this lack of transparency challenges authorship, originality, and control.

The project therefore centered on explainable AI as a way to move beyond opaque automation, making the underlying mechanics visible and enabling more intentional creative decisions.

The Approach

We employed a hybrid design-research methodology combining generative modeling, performance evaluation, and AI explainability tools.

Two distinct datasets for style extraction

Two distinct datasets for content extraction

Methodology


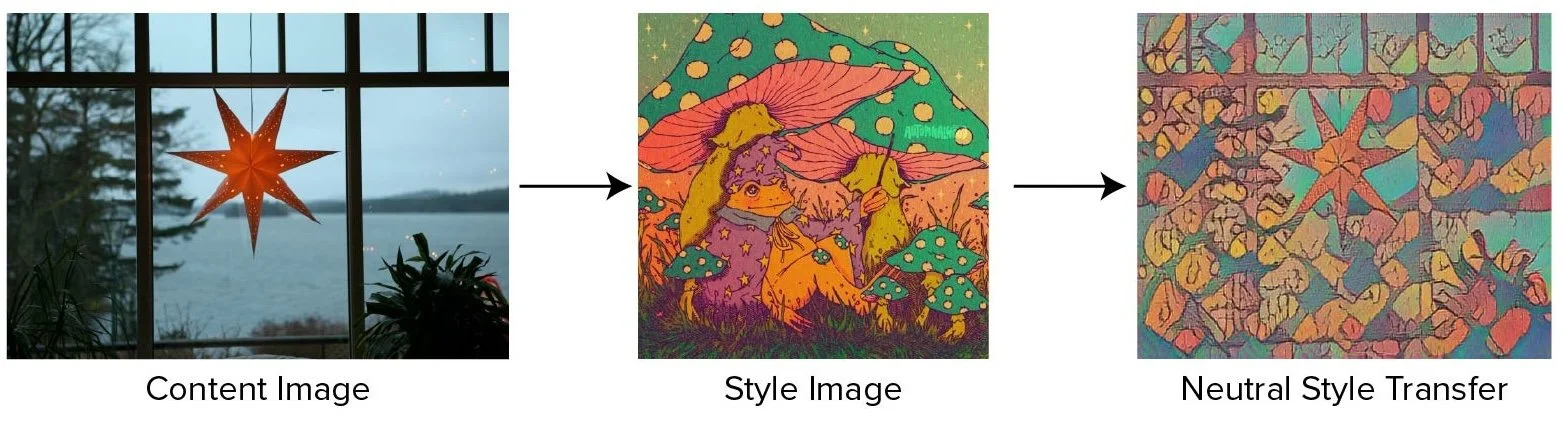

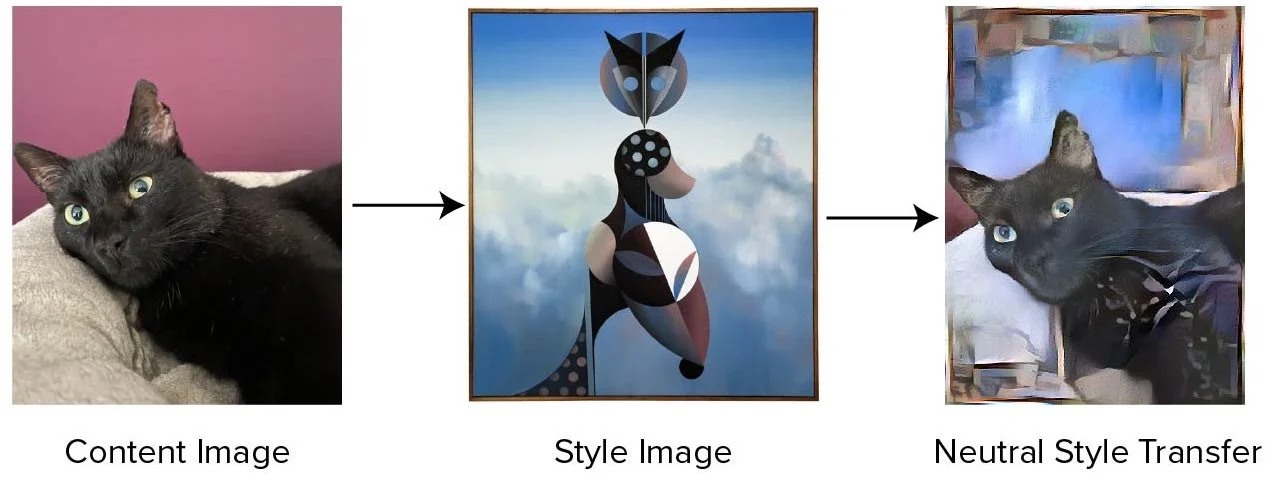

Neural Style Transfer (NST)

Developed and refined using TensorFlow with extensive parameter exploration.
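The parameter exploration centers on the NST objective: a content loss that compares feature maps directly, and a style loss that compares their Gram matrices (channel correlations). The sketch below uses NumPy rather than TensorFlow so it stays self-contained. It assumes the feature maps have already been extracted from a CNN such as VGG, and its weight defaults are illustrative only, not the project's tuned values.

```python
import numpy as np

def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Gram matrix of a (height, width, channels) feature map, normalized by spatial size."""
    h, w, c = features.shape
    flat = features.reshape(h * w, c)      # one row per spatial position
    return flat.T @ flat / (h * w)         # (channels, channels) channel correlations

def content_loss(gen_feats: np.ndarray, content_feats: np.ndarray) -> float:
    # mean squared difference between raw feature maps: preserves layout and objects
    return float(np.mean((gen_feats - content_feats) ** 2))

def style_loss(gen_layers, style_layers) -> float:
    # mean Gram-matrix mismatch across style layers: matches texture, ignores layout
    return float(np.mean([np.mean((gram_matrix(g) - gram_matrix(s)) ** 2)
                          for g, s in zip(gen_layers, style_layers)]))

def total_loss(gen_content, content_target, gen_styles, style_targets,
               content_weight=1e4, style_weight=1e-2) -> float:
    # illustrative weights; the content/style ratio is the main creative dial
    return (content_weight * content_loss(gen_content, content_target)
            + style_weight * style_loss(gen_styles, style_targets))

# Usage with random stand-in feature maps (hypothetical shapes)
rng = np.random.default_rng(0)
content = rng.random((8, 8, 4))
style = [rng.random((8, 8, 4)), rng.random((4, 4, 8))]
print(total_loss(content, content, style, style))  # 0.0: features match both targets
```

In a full TensorFlow pipeline, the generated image would be updated by gradient descent on this total loss; raising `style_weight` relative to `content_weight` pushes results toward stronger stylization.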

VGG-16 Classification

Evaluated the balance of content preservation and style application in generated images.
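One way to turn two classifiers into a balance measure is to read off the style classifier's probability for the target style and the content classifier's probability for the original content class. The sketch below assumes both classifiers return raw logits; the harmonic-mean "balance" score is a hypothetical summary metric added for illustration, not one reported by the project.

```python
import numpy as np

def softmax(logits) -> np.ndarray:
    z = np.asarray(logits, dtype=float)
    z = z - z.max()                  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def balance_scores(style_logits, content_logits, target_style: int, source_content: int) -> dict:
    """Score one generated image on style application and content preservation."""
    style_score = float(softmax(style_logits)[target_style])
    content_score = float(softmax(content_logits)[source_content])
    # hypothetical summary: harmonic mean penalizes images that succeed on only one axis
    balance = 2 * style_score * content_score / (style_score + content_score + 1e-12)
    return {"style": style_score, "content": content_score, "balance": balance}

# Hypothetical logits for a strongly stylized image and a content-faithful one
print(balance_scores([5.0, 0.0], [0.0, 5.0], target_style=0, source_content=0))
print(balance_scores([1.0, 0.0], [3.0, 0.0], target_style=0, source_content=0))
```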

SHAP & Deep Explainer

Visualized algorithmic decisions, clarifying how styles were applied and enabling designers to better direct AI creativity.
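SHAP attributes a model's output to its input features using Shapley values. SHAP's DeepExplainer approximates these values for deep networks with a DeepLIFT-based method over background samples; the sketch below swaps in exact enumeration on a tiny, hypothetical three-feature model to show what is being approximated. "Missing" features are replaced by a baseline value, which is an assumption of this sketch.

```python
from itertools import combinations
from math import factorial

def shapley_values(value_fn, n_features: int) -> list:
    """Exact Shapley values by enumerating every coalition (feasible only for small n)."""
    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(len(others) + 1):
            # weight = |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n_features - size - 1) / factorial(n_features)
            for coalition in combinations(others, size):
                s = frozenset(coalition)
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))  # marginal contribution of i
    return phi

def toy_model(x):
    # hypothetical "style score" from three image features; x[0] * x[2] is an interaction
    return 2 * x[0] + 3 * x[1] + x[0] * x[2]

x = [1.0, 1.0, 1.0]          # input being explained
baseline = [0.0, 0.0, 0.0]   # reference input for "absent" features

def value_fn(coalition):
    masked = [x[j] if j in coalition else baseline[j] for j in range(len(x))]
    return toy_model(masked)

phi = shapley_values(value_fn, 3)
print([round(v, 6) for v in phi])  # [2.5, 3.0, 0.5]
```

The interaction term is split evenly between features 0 and 2, and the attributions sum to `toy_model(x) - toy_model(baseline)`, the additivity property that makes SHAP maps readable as a breakdown of the output.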

Explaining the “Black Box”

Using custom-trained Neural Style Transfer (NST) models, we uncovered a central creative trade-off.

Figure: Model 1 and Model 2 outputs, evaluated with VGG-16 style and content classifiers

Model 1 applied artistic styles strongly but distorted content, while Model 2 preserved content fidelity but diluted artistic expression.
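The trade-off can be seen in a deliberately simplified toy: if both objectives were plain squared distances, minimizing content_weight·‖x − content‖² + style_weight·‖x − style‖² has the closed-form solution x* = (content_weight·content + style_weight·style) / (content_weight + style_weight). Real NST style losses compare Gram matrices, so this is only an analogy, and the target vectors are hypothetical.

```python
import numpy as np

def blended_optimum(content, style, content_weight, style_weight):
    """Minimizer of content_weight*||x - content||^2 + style_weight*||x - style||^2."""
    return (content_weight * content + style_weight * style) / (content_weight + style_weight)

content = np.array([0.2, 0.8, 0.5])   # hypothetical content target
style = np.array([0.9, 0.1, 0.7])     # hypothetical style target

def sweep(style_weights):
    rows = []
    for w in style_weights:
        x = blended_optimum(content, style, 1.0, w)
        rows.append((w,
                     float(np.linalg.norm(x - content)),   # content drift
                     float(np.linalg.norm(x - style))))    # remaining gap to the style target
    return rows

for w, drift, gap in sweep((0.1, 1.0, 10.0)):
    print(f"style_weight={w:>5}: content drift={drift:.3f}, style gap={gap:.3f}")
```

As the style weight grows, content drift rises and the style gap shrinks: no single weighting minimizes both, which mirrors the split between a Model 1-like and a Model 2-like result.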

SHAP Analysis

The analysis revealed how the models weighted visual features at a granular level, exposing the hidden mechanics of style transfer.
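Reading SHAP results "at a granular level" usually means collapsing a per-pixel, per-channel attribution array into a heatmap and checking how concentrated it is. The sketch below assumes an attribution array shaped like the input image (height, width, channels), as DeepExplainer produces for image inputs; the top-fraction concentration measure is a hypothetical diagnostic, and the data is synthetic.

```python
import numpy as np

def attribution_heatmap(shap_map: np.ndarray) -> np.ndarray:
    """Collapse an (H, W, C) attribution array to (H, W) by summing signed values over channels."""
    return shap_map.sum(axis=-1)

def top_fraction_share(heatmap: np.ndarray, fraction: float = 0.1) -> float:
    """Share of total absolute attribution held by the top `fraction` of pixels."""
    magnitudes = np.abs(heatmap).ravel()
    k = max(1, int(round(fraction * magnitudes.size)))
    top = np.sort(magnitudes)[-k:]            # k largest magnitudes
    total = magnitudes.sum()
    return float(top.sum() / total) if total > 0 else 0.0

# Synthetic attributions: low background noise plus one strongly weighted patch
rng = np.random.default_rng(0)
shap_map = rng.normal(0.0, 0.01, size=(32, 32, 3))
shap_map[8:12, 8:12, :] += 0.5
heatmap = attribution_heatmap(shap_map)
print(heatmap.shape, round(top_fraction_share(heatmap, 0.1), 3))
```

A share close to the chosen fraction means attribution is spread evenly; a share near 1.0 means a few regions drive the output.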

These findings highlighted the value of explainable AI in moving beyond black-box outputs, giving designers transparency and agency in shaping creative outcomes.

The Outcome

NeuroCanvas produced styled outputs and explainability maps that exposed the trade-offs between content fidelity and artistic expression.

By visualizing how AI applied styles through SHAP, the project made algorithmic decisions transparent and reframed AI as an expressive creative partner rather than a black-box tool.

The outcome underscored that while AI can generate, human intent and tuning remain essential to shape meaningful and ethical results.